Effective metadata management requires software with robust functionality, enabling full capability for business and technical users

Introduction

Many government agencies and corporations are currently examining the metadata tools on the marketplace to decide which of these tools, if any, meet the requirements for their metadata management solutions. Often these same organizations want to know what types of functionality and features they should be looking for in this tool category. Unfortunately, this question becomes very complicated, as each tool vendor has its own “marketing spin” about which functions and features are the most advantageous. This leaves the consumer with a very difficult task, especially when none of the vendors’ tools seems to fully meet the requirements of the metadata management solution. At EWSolutions, we have several clients that have these same concerns about the tools in the market.

Although I have no plans to start a software company, I would like to take this opportunity to play software designer and present my optimal metadata tool’s key functionality.

One of the challenges with this exercise is that metadata functionality has a great deal of depth and breadth. Therefore, to categorize our tool’s functionality, I will use the six major components of a managed metadata environment (MME):

- Meta Data Sourcing & Meta Data Integration Layers

- Meta Data Repository

- Meta Data Management Layer

- Meta Data Marts

- Meta Data Delivery Layer

I will now walk through each of these MME components and describe the key functionality that my optimal metadata tool would contain.

Meta Data Sourcing & Meta Data Integration Layers

For simplicity’s sake, I will discuss this “dream” tool’s functionality for both the metadata sourcing and the metadata integration layers together. The goal of the metadata sourcing and integration layers is to extract the metadata from its source, integrate it where necessary, and to bring it into the Meta Data Repository.

Platform Flexibility

It is important for the metadata sourcing technology to be able to work with mainframe applications, distributed systems, and files (databases, flat files, spreadsheets, etc.), whether they sit on the network or in remote locations. The sourcing functions must be able to run in each of these environments so that the metadata can be brought into the repository.

Pre-built Bridges

Many of the current metadata integration tools come with a series of pre-built metadata integration bridges. The optimal metadata tool would also have these pre-built bridges. Where our optimal tool would differ from the vendor tools is that this tool would have bridges to all of the major relational database management systems (e.g. Oracle, DB2, SQL Server), the most common vendor packages, several code parsers (COBOL, JCL, C++, SQL, XML, etc.), key data modeling tools (ERWin, ERStudio, PowerDesigner, etc.), top ETL (extraction, transformation and load) tools (e.g. Informatica) and the major front-end tools (e.g. Business Objects, Cognos).

As much as possible, I would want my metadata tool to use XML (extensible markup language) as the transport mechanism for the metadata. While XML cannot directly interface with all metadata sources, it would cover a great number of them. These metadata bridges would not just bring metadata from its source and load it into the repository. They would be bi-directional, allowing metadata to be extracted from the metadata repository and brought back into the source tool. Lastly, these metadata bridges would not just be extraction processes; they would also be able to act as “pointers” to where the metadata is located. This distributed metadata capability is very important for a repository to have.
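The bi-directional XML transport described above can be sketched as a simple round trip. This is a minimal illustration, not any vendor's bridge format: the `ColumnMetadata` record, the element and attribute names, and the `source_uri` "pointer" field are all hypothetical, and only Python's standard `xml.etree.ElementTree` module is used.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class ColumnMetadata:
    # Hypothetical record shape; a real bridge would carry many more attributes.
    table: str
    column: str
    data_type: str
    # A non-empty value acts as a "pointer": the metadata stays at its source.
    source_uri: str = ""


def to_xml(records):
    """Serialize metadata records to an XML string (source -> repository)."""
    root = ET.Element("metadata")
    for r in records:
        ET.SubElement(
            root,
            "column",
            table=r.table,
            name=r.column,
            type=r.data_type,
            sourceUri=r.source_uri,
        )
    return ET.tostring(root, encoding="unicode")


def from_xml(xml_text):
    """Parse the same XML back into records (repository -> source tool)."""
    root = ET.fromstring(xml_text)
    return [
        ColumnMetadata(
            el.get("table"), el.get("name"), el.get("type"), el.get("sourceUri", "")
        )
        for el in root.iter("column")
    ]
```

Because the same document format is written and read, one bridge definition serves both directions; a record with a `source_uri` would be resolved at query time rather than copied into the repository.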

Error Checking & Restart

Any high-quality metadata tool would have an extensive error checking capability built into the sourcing and integration layers. Metadata in an MME, like data in a data warehouse, must be of high quality or it will have little value. This error checking facility would check the metadata that it is reading for errors and capture statistics on the errors that the process encounters (meta metadata). In addition, the tool would support configurable error levels for the metadata.

For example, it would give the tool administrator the ability to configure the action taken for each type of error that occurs in the process. Should the metadata be:

- Flagged with an informational/error message

- Flagged as an error and then not loaded into the repository

- Flagged as a critical error and the entire metadata integration process is stopped.

Also, this process would have “check points” that would allow the tool administrator to restart the process. These check points would be placed in the proper locations to ensure that the process could be restarted with the least degree of impact on the metadata itself and on its sourcing locations.
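The three error levels and the check point restart could work together roughly as follows. This is a sketch under stated assumptions: the error codes, the `POLICY` mapping, the record fields, and the choice of a record index as the check point are all hypothetical illustrations, not features of any existing tool.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    FLAG = "flag"      # load the record, but record an informational message
    REJECT = "reject"  # flag as an error and do not load the record
    ABORT = "abort"    # critical error: stop the whole integration run


# Hypothetical administrator-configured mapping from error code to action.
POLICY = {
    "missing_description": Action.FLAG,
    "missing_type": Action.REJECT,
    "missing_name": Action.ABORT,
}


@dataclass
class RunResult:
    loaded: list = field(default_factory=list)
    error_counts: dict = field(default_factory=dict)  # statistics on errors
    checkpoint: int = 0   # index of the next record to process
    aborted: bool = False


def find_error(record):
    """Return an error code for the record, or None if it is clean."""
    if not record.get("name"):
        return "missing_name"
    if not record.get("type"):
        return "missing_type"
    if not record.get("description"):
        return "missing_description"
    return None


def integrate(records, checkpoint=0, policy=POLICY):
    """Process records from the check point onward, applying the policy."""
    result = RunResult(checkpoint=checkpoint)
    for index in range(checkpoint, len(records)):
        record = records[index]
        error = find_error(record)
        if error is not None:
            result.error_counts[error] = result.error_counts.get(error, 0) + 1
            action = policy[error]
            if action is Action.ABORT:
                # The check point still points at the failing record.
                result.aborted = True
                return result
            if action is Action.REJECT:
                result.checkpoint = index + 1
                continue
        result.loaded.append(record)
        result.checkpoint = index + 1
    return result
```

After an aborted run, the administrator would correct the offending source record and call `integrate` again with the returned `checkpoint`, so records that were already loaded are not processed twice.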

Meta Data Repository

The metadata repository component is the physical database that persistently catalogs and stores the actual metadata. The repository and its corresponding meta model comprise the backbone of the MME. Therefore, in listing the optimal metadata tool’s functionality, I will pay special attention to the design and implementation of the meta model.

Meta Model Design

A meta model is a physical database schema for metadata. Anytime an MME is implemented there are integration processes that must be custom built to bring metadata into the repository. Therefore, a good meta model needs to be understandable to the repository developers working with it.

As a result, the meta model should not be designed in a highly abstracted, object-oriented manner. Instead mixing classic relational modeling with structured object-oriented design is the preferable approach to designing a meta model.

On the other hand, when highly cryptic (abstracted) object-oriented design is used for the construction of the meta model, it becomes unwieldy and difficult for the IT developers to work with. The possible exception to this guideline would be if the abstracted object-oriented model has relational views built on the model that would allow for read/write/update capabilities. These views must be understandable and fully extendable.

Meta Model Implementation

The metadata repository must not be housed in a proprietary database management system. Instead, it should be stored on any of the major open relational database platforms (e.g. SQL Server, Oracle, DB2) so that standard SQL can be used with the repository.
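To make the point about standard SQL concrete, here is a minimal sketch of a two-table relational meta model queried with plain SQL. The table and column names are hypothetical, and SQLite (through Python's built-in `sqlite3` module) is used only so the example runs without a database server; it stands in for whichever open relational platform hosts the repository.

```python
import sqlite3

# Hypothetical, deliberately simple meta model: readable relational tables
# rather than a highly abstracted object model.
SCHEMA = """
CREATE TABLE meta_table (
    table_id            INTEGER PRIMARY KEY,
    table_name          TEXT NOT NULL UNIQUE,
    business_definition TEXT
);
CREATE TABLE meta_column (
    column_id   INTEGER PRIMARY KEY,
    table_id    INTEGER NOT NULL REFERENCES meta_table(table_id),
    column_name TEXT NOT NULL,
    data_type   TEXT NOT NULL
);
"""


def build_repository():
    """Create an in-memory repository and load a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute(
        "INSERT INTO meta_table VALUES (1, 'CUSTOMER', "
        "'A person or organization holding an account')"
    )
    conn.executemany(
        "INSERT INTO meta_column (table_id, column_name, data_type) "
        "VALUES (?, ?, ?)",
        [(1, "CUST_ID", "INTEGER"), (1, "CUST_NAME", "VARCHAR(80)")],
    )
    return conn


def columns_of(conn, table_name):
    """List (column_name, data_type) for a table using an ordinary join."""
    cursor = conn.execute(
        "SELECT c.column_name, c.data_type "
        "FROM meta_column c JOIN meta_table t ON t.table_id = c.table_id "
        "WHERE t.table_name = ? ORDER BY c.column_id",
        (table_name,),
    )
    return cursor.fetchall()
```

A repository developer can read this schema and write the join without learning a proprietary query interface, which is the practical benefit of keeping the meta model relational.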

Semantic Taxonomy

Many government agencies and large corporations’ IT departments are looking to define an enterprise-level classification/definition scheme for their data. This semantic taxonomy would then provide these organizations with the ability to classify their data, in order to identify data and process redundancies in their IT environment. Therefore, the optimal metadata tool would provide the capabilities to capture, maintain, and publish a semantic taxonomy for the metadata in the repository.
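One way a taxonomy helps surface redundancy: once each data element is classified under a taxonomy term, any term holding more than one element is a candidate for duplicated data. The sketch below assumes a flat element-to-term mapping; the element names and taxonomy paths are invented for illustration.

```python
from collections import defaultdict

# Hypothetical classification of physical data elements under taxonomy terms.
CLASSIFICATION = {
    "CRM.CUSTOMER.CUST_NAME": "Party/Customer/Name",
    "BILLING.CLIENT.CLIENT_NM": "Party/Customer/Name",
    "CRM.CUSTOMER.BIRTH_DT": "Party/Customer/Birth Date",
}


def redundancy_candidates(classification):
    """Return taxonomy terms that are implemented by more than one element."""
    by_term = defaultdict(list)
    for element, term in classification.items():
        by_term[term].append(element)
    return {
        term: sorted(elements)
        for term, elements in by_term.items()
        if len(elements) > 1
    }
```

Here the customer name appears in both the CRM and billing systems, so it would be reported for a data steward to review.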

Meta Data Management Layer

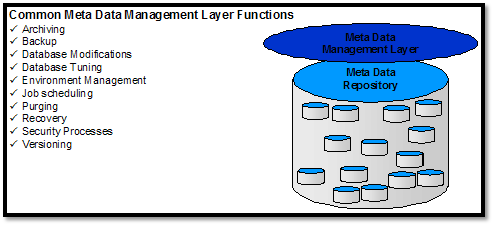

The purpose of the metadata management layer is to provide the systematic management of the metadata repository and the other MME components. This layer includes many functions, including (see Figure 1: Meta Data Management Layer):

- Archiving – of the metadata within the repository

- Backup – of the metadata on a scheduled basis

- Database Modifications – allows for the extending of the repository

- Database Tuning – is the classic tuning of the database for the meta model

- Environment Management – the processes that allow the repository administrator to manage and migrate between the different versions/installs of the metadata repository

- Job scheduling – would manage both the event-based and trigger-based metadata integration processes

- Purging – should handle the definition of the criteria required to define the MME purging requirements

- Recovery – process would be tightly tied into the backup and archiving facilities of the repository

- Security Processes – would provide the functionality to define security restrictions from an individual and group perspective

- Versioning – metadata is historical, so this tool would need to version the metadata by date/time of entry into the MME

Figure 1 – Metadata Management Layer functions
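Of the functions listed above, versioning by date/time of entry lends itself to a short illustration. The sketch below is one possible approach, not a description of any product: the `VersionedStore` class and its method names are hypothetical. It keeps every version of a metadata value and answers "what did this look like as of a given moment?"

```python
from bisect import bisect_right
from datetime import datetime


class VersionedStore:
    """Keeps every version of a metadata value, ordered by entry time."""

    def __init__(self):
        # key -> list of (entered_at, value), kept sorted by entered_at
        self._history = {}

    def put(self, key, value, entered_at):
        """Record a new version; older versions are never overwritten."""
        versions = self._history.setdefault(key, [])
        versions.append((entered_at, value))
        versions.sort(key=lambda version: version[0])

    def as_of(self, key, when):
        """Return the value in effect at `when`, or None if none existed yet."""
        versions = self._history.get(key, [])
        entry_times = [entered_at for entered_at, _ in versions]
        position = bisect_right(entry_times, when)
        return versions[position - 1][1] if position else None
```

For example, if a column's data type was entered as `CHAR(8)` and later changed to `INTEGER`, a query dated between the two entries still returns `CHAR(8)`, which is what makes historical impact analysis possible.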

The optimal metadata tool would also have very good documentation on all of its components, processes, and functions. Interestingly enough, too many of the current metadata vendors neglect to provide good documentation with their tools. If a company wants to be taken seriously in the metadata arena, it must “eat its own dog food”.

Meta Data Delivery Layer

The metadata delivery layer is responsible for the delivery of the metadata from the repository to the end users and to any applications or tools that require metadata feeds to them.

Web Enabled

A Java-based, web-enabled, thin-client front-end has become an industry standard for presenting information to the end user, and it is certainly the best approach for an MME. This architecture provides the greatest degree of flexibility and a lower TCO (total cost of ownership) for implementation, and the web browser paradigm is widely understood by most end users within an organization. This web-enabled front-end would be fully configurable. For example, I may want to define the options that my users can select, or I may want to put my company’s logo in the upper right-hand corner of the end user screen.

Pre-built Reports

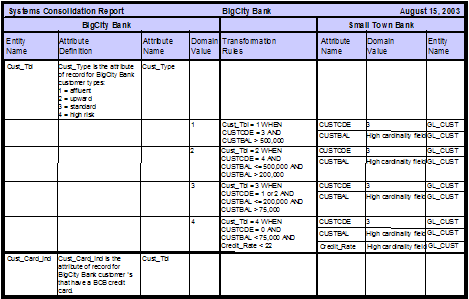

Impact analyses are technical metadata driven reports that help an IT department assess the impact of a potential change to their IT applications (see Figure 2: “Impact Analysis: Column Analysis for a Bank” for an example). Impact analyses can come in an almost infinite number of variations; certainly, the optimal metadata tool would provide dozens of these types of reports pre-built and completely configurable. In addition, the tool would be able to “push” these pre-built reports and any custom-built reports to the desktops of specific users or groups of users, or even to their email addresses. These pushed reports could be configured to be released based on an event trigger or on a scheduled basis.

Figure 2: Impact Analysis: Column Analysis for a Bank
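At its core, an impact analysis report is a traversal of lineage metadata: start from the item that is about to change and follow every "feeds" relationship downstream. The sketch below assumes the repository can expose lineage as a simple adjacency mapping; the `LINEAGE` contents and object names are invented for illustration.

```python
from collections import deque

# Hypothetical lineage metadata: each key feeds the items in its list.
LINEAGE = {
    "SRC.ACCOUNT.BALANCE": ["ETL.load_accounts"],
    "ETL.load_accounts": ["DW.FACT_ACCOUNT.BALANCE_AMT"],
    "DW.FACT_ACCOUNT.BALANCE_AMT": ["RPT.monthly_balances", "RPT.branch_summary"],
}


def impacted_by(start, lineage=LINEAGE):
    """Breadth-first walk returning everything downstream of `start`."""
    seen = {start}
    queue = deque([start])
    impacted = []
    while queue:
        node = queue.popleft()
        for downstream in lineage.get(node, []):
            if downstream not in seen:  # guards against cycles and repeats
                seen.add(downstream)
                impacted.append(downstream)
                queue.append(downstream)
    return impacted
```

Asking what is impacted by a change to `SRC.ACCOUNT.BALANCE` returns the ETL job, the warehouse column, and both reports, in order of distance from the change. A pre-built report would add formatting and filtering on top of a traversal like this.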

Website Meta Data Entry

Most enterprise metadata repositories provide their business users a web-based front-end so that the data stewards can enter metadata directly into the repository. This front-end capability would be fully integrated into the MME and it would be able to write back to the metadata repository. In addition, not only would this entry point allow metadata to be written to the repository, it would also allow for relationship constraints and drop-down boxes to be fully integrated into the end user front-end. Moreover, many of these business metadata related entry/update screens would be pre-built and fully configurable to allow the repository administrator to modify them as required. The ability to use the web front-end to write back to the repository is a feature that is lacking in many of today’s metadata tools.

Publish Graphics

The optimal metadata tool would also have the ability to publish graphics to its web front-end. The users would then be able to click on the metadata attributes within these graphics for metadata drill-down, drill-up, drill-through and drill-across. For example, a physical data model could be published to the website. As an IT developer looks at this data model they would have the ability to click on any of the columns within the physical model to look at the metadata associated with it. This is another weakness in many of the major metadata tools on the market.

Metadata Marts

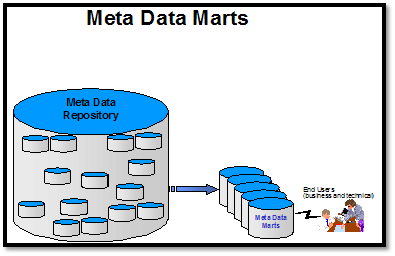

A metadata mart is a database structure, usually sourced from a metadata repository, which is designed for a homogeneous metadata user group (see Figure 3: “Metadata Marts”). “Homogeneous metadata user group” is a fancy term for a group of users with similar needs.

Figure 3: Metadata Marts

This tool would come with pre-built metadata marts for a few of the more complex and resource-intensive impact analyses. In addition, it would have metadata marts for each of the significant industry standards, such as Common Warehouse Meta Model (CWM), Dublin Core, and ISO 11179.

Conclusion

The optimal metadata management tool is possible, as described in this article. Organizations need this product to manage their metadata effectively, to give meaning to their data and provide value to this asset. Software development organizations can use this article as the basis for developing a product that will satisfy all metadata management requirements.