Data extraction, transformation, and loading processes enable many activities in information technology projects. Understanding the concepts and practices of ETL is essential for all data and technology professionals

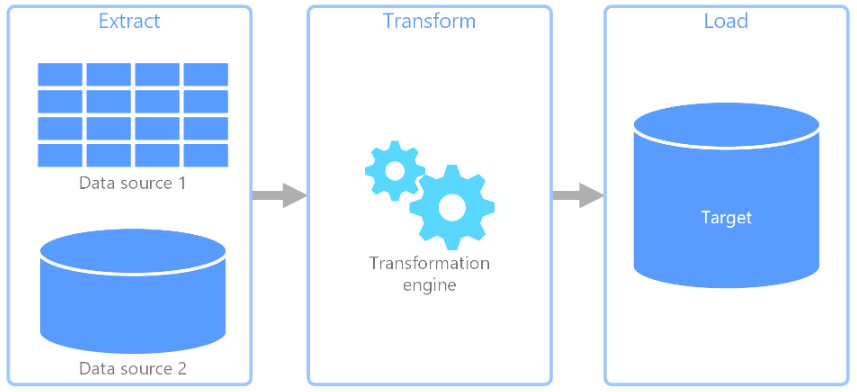

Data extract, transform, load (ETL) is a process of copying data from one or more sources into a target system which is usually designed to represent the data differently from the source(s). ETL processes are used for data warehousing, data integration, and data migration projects (Figure 1).

- Data extraction involves extracting data from homogeneous or heterogeneous sources

- Data transformation methods often clean, aggregate, de-duplicate, and in other ways, transform the data into properly defined storage formats to be queried and analyzed.

- Data loading represents the insertion of data into the final target repository, such as an operational data store, a data mart, or a data warehouse.

ETL processes commonly integrate data from multiple applications (systems and sources), perhaps developed and supported by different vendors or hosted on separate computer hardware. The separate systems containing the original data frequently are managed and operated by different teams. For example, a cost accounting system may combine data from payroll, sales, and purchasing.

There is always a need for source-to-target data mappings before ETL processes are designed and developed. Logical data maps (usually prepared in spreadsheets) describe relationships between the starting points and the ending points of an ETL system.

Figure 1: Representative ETL process

Reasons for using ETL in Data Integration, Data Migration, Data Warehousing Projects

- When used on an enterprise data warehouse DW project, the result provides deep historical and a current context of data for the organization.

- By offering a consolidated view, the result of and ETL process makes it easier for business users to analyze and report on data relevant to their enterprises.

- ETL tools and processes have evolved over many years to support new integration requirements for streaming data, big data (ex., social media, the Internet of Things (IoT), event logging), self-service data access, and more.

Steps for ETL Processes

ETL processes are composed of three separate but crucial functions often combined into a single programming tool that helps in preparing data and in the management of databases.

After the discovery and recording of source data, carefully designed ETL processes extract data from source systems, implement data quality tasks/consistency standards, conform data so that separate sources can be used together, and finally deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions.

Discovering Source Data

Some or all of the source systems may have been identified during project data-modeling sessions, but this cannot be taken for granted. Normally, only the key source systems are identified during the project data-modeling phase. It is up to the ETL team to drill down further into the data requirements to determine every source system, table, and attribute required in the ETL processes. Identifying the required data sources, or systems-of-record, for each element/table is a challenge that must be solved before moving to data extracts.

Extracting Source Data

The data extract phases represent extractions from source systems to make all of it accessible for further processing. The main objective of this phase is to retrieve all the required data from the source systems with as little time and resources as possible.

Types of data extractions:

- Full Extractions – many source systems cannot identify to users which data was changed since the last extraction. Therefore a full extraction of all data is necessary each time changed data is needed from those individual sources. A full extract requires maintaining a copy of the last extract in the same format to identify changes when a later extract becomes available. The ETL team is responsible for capturing data-content changes during the incremental loads after an initial load.

- Update Extractions – when source systems can provide notifications that specific data has been changed and further identify each change, this is the easiest way to extract the data. Most data sources provide a mechanism to identify changes so that database replication can be supported.

- Incremental Extractions – Some source systems are unable to provide notification that an update has occurred, but they can identify which records were modified, and provide an extract of only those records. During subsequent ETL steps, the system needs to identify changes and propagate them down. One of the drawbacks of incremental extraction is that it may not be possible to detect deleted records in source data.

Modern data processes often include real-time data – for example, web analytics data from a large e-commerce website. For this case, data cannot be extracted and transformed in large batches: the need arises to perform ETL on streaming data. This means that as client applications write data to the data source, the data should be treated, transformed, and saved immediately to the target data store.

Transforming Data

Data extracted from sources is often the result of transactions and therefore not usable in target databases in that form. Much of such source data needs to be cleansed, deduplicated, aggregated, or otherwise transformed. This a key step where the ETL process adds value and changes data such that insightful application reports can be generated.

Data that does not require any transformation in an ETL process is referred to as direct move or pass through data.

During the transformation phase, ETL processes can perform customized operations on data. For instance, if the user wants sum-of-sales revenue, which is not in the database, it can be added. Alternatively, if the first name and the last name in a table are in different columns, it is possible to concatenate them before loading.

Transformation steps apply a set of rules to convert the data from the source to the target. This includes converting any measured data to the same dimension (i.e., conformed dimension) using the same units so that they can later be joined. Transformation steps also require joining data from several sources, generating aggregates, generating surrogate keys, sorting, deriving new calculated values, and applying advanced validation rules.

Examples of ETL Data Transformations

ETL data transformations are the process of converting data from one format or structure into another format or structure. Data transformations can be simple or complex based on the required changes to the data between the source (initial) data and the target (final) data.

Data transformations typically are performed via a mixture of manual and automated steps. Tools and technologies used for data transformation can vary widely based on the format, structure, complexity, and volume of the data being transformed.

Following are some of the most common types of data transformations:

- Aggregation – Data elements are aggregated from multiple data sources and databases

- Cleaning – Examples include changing nulls to 0 or “male” to “M” and “female” to “F”

- Data validation – Simple or complex data validation; for example, if the first three columns in a row are empty then reject the row from processing

- De-duplication – Identifying and removing duplicate records or duplicate columns

- Derivation – Applying business rules to data that derive new calculated values from existing data; for example, creating a revenue metric that subtracts taxes

- Filtering – Selecting only specific rows and columns

- Integration -Assign each unique data element one standard name with one standard definition.

- Joining – Linking data from multiple sources – for example, adding advertising spending data from across multiple platforms, such as Google Adwords and Facebook ads

- Key restructuring – Establishing key relationships across tables

- Splitting -Splitting a single column into multiple columns

- Summary – Summarize values to obtain total figures which are calculated and stored at multiple levels as business metrics – for example, adding up all purchases a customer has made to build a customer lifetime value (CLV) metric

Loading Data Stores

Two primary methods are used to load data into a warehouse:

- Full loads – entire data load to targets that takes place the first time a data source is loaded into the warehouse

- Incremental loads –loading data that has changed (“delta loads”) between source and target at regular intervals. The most recent extract date is maintained so that only records added after this date are loaded.

The ETL phase loads the data into the end target — often a data warehouse. This process varies widely from one organization to another. Some data warehouses may overwrite existing information with cumulative information; updating extracted data is can be done real-time, daily, weekly, or monthly.

Other data warehouses (or even other parts of the same data warehouse) may add new data in a historical form at regular intervals — for example, hourly. To understand this, consider a data warehouse that is required to maintain sales records of the last year. This data warehouse overwrites any data older than a year with newer data. However, the entry of data for any one year window is made historically. The timing and scope to replace or append data are strategic design choices dependent on the time available and the business needs. More complex systems can maintain a history and audit trail of all changes to the data loaded in the data warehouse.

ETL Development Tools

Designing and maintaining the ETL process is considered one of the most challenging and resource-intensive tasks on a data warehouse project. Many data warehousing projects use ETL tools to manage this process.

ETL is much easier and faster to use when compared to the traditional methods of moving data, since they require writing conventional computer programs. ETL tools contain graphical interfaces which speed up the process of mapping tables and columns between source and target databases.

ETL tools can collect, read, and migrate data from multiple data structures and across different platforms, like a mainframe, server, etc. Also, ETL technology can identify “delta” changes as they occur, which enables ETL tools to copy only changed data without the need to perform full data refreshes.

Moreover, ETL tools often include ready-to-use operations like filtering, reformatting, sorting, joining, merging, aggregation; they support transformation scheduling, version control, monitoring, and unified metadata management.

Conclusion

A common problem organizations face is how to gather data efficiently and effectively from multiple sources, in multiple formats, then copy that data to one or more data stores. The destination or target may not be the same type of data store as the source, the formats are often different, and data needs to be revised or cleaned before loading it into its final destination.

Various tools, services, and processes have been developed over the years to help address these challenges. No matter which process is used, there is a frequent need to coordinate the work and apply some level of data transformation within the data pipeline. ETL has been a successful solution for a majority of organizations.