Physical data models should follow the logical data model as closely as possible, while adding the optimizing items to conform to a specific database and platform.

Introduction

Physical data modeling involves transforming the logical model from a purely business design to a design optimized to run in a particular environment. Things that must be considered when doing physical modeling include the specific RDBMS, the hardware environment, the data access frequency and the data access paths. Physical data modeling involves adding properties, such as space, free space and indexes. It may also involve altering the logical structures. There are a variety of physical changes a database administrator may make to the logical structures. The three main reasons to deviate from the logical model are to improve performance, improve usability and save DASD.

There are many drawbacks to deviating from the logical model, so any changes should not be taken lightly. If significant improvements can be gained to support the business, consider the options.

A Database Administrator (DBA) usually creates the actual physical data model. A Data Administrator / Data Analyst (DA), the business user, and the applications developer are also involved in changing the logical data model to its physical representation. The processes can be explained through a real-life example (the names were changed to protect the guilty) of a database design. This example resulted in physical changes to improve performance, improve usability and save DASD. If the tables were physically created in a logically pure format, it would have meant many headaches for the company. It is important to examine, at a high level, the logical design then explain the physical design decisions that were made.

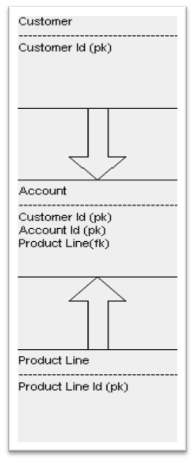

Joe’s Cheesy Collectibles enrolls people in a program where they select a line of collectibles and are sent a different cheesy collectible each month (sort of like those music and video clubs). The customer may choose from many cheesy collectible lines. The most popular lines are Elvis velvet paintings, Three Stooges picture plates, and Soap Opera statuettes. One customer may enroll in one or many cheesy collectible lines. The customer may also enroll in the same line more than once (can you ever have enough Elvis velvet paintings?). Each time the customer enrolls in a new line, he will get a new account. Our first three entities would be customer, product line and account. (see diagram 1)

Diagram 1

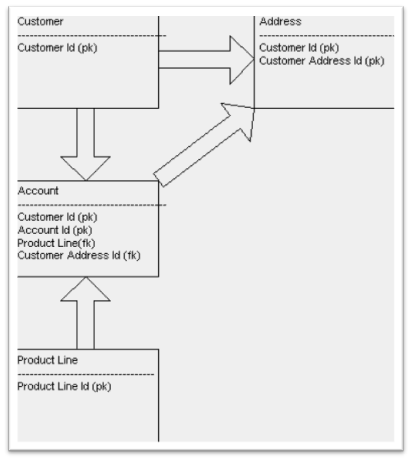

Next, we need to know where to ship the product and send the bill each month. In this case, a customer can have more than one product line so we need to store the address at the customer level not the account level. This will enable us to share the address across multiple customer accounts. To complicate things, we also allow customers to use more than one address. Maybe Tom Smith wants the Elvis Velvet Paintings sent to his house but wants to hide his obsession with soap opera statuettes from his wife so he has those items sent to his office. We will then need to store the address at the customer level but point the account table to the correct address. We could do this by adding an address id to the account table. (see diagram 2)

Diagram 2

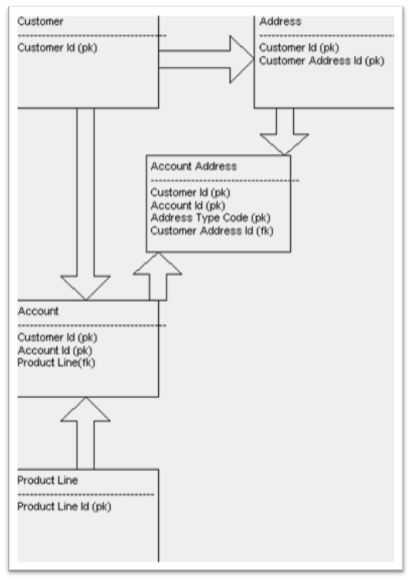

Let’s complicate things a little further (remember this is based on a real life example). We also need to allow a customer to send the shipments to one of his addresses and the bill’s to another. This will require a junction table between account and address. Using a junction table will enable: 1) a customer to have many addresses; 2) a customer to have many accounts; 3) an account to use many of the customers’ addresses.

In reality, the account may use two of the customer’s addresses. In the junction table we will add an address use code. The valid values would be 1=billing, 2=shipping. (see diagram 3)

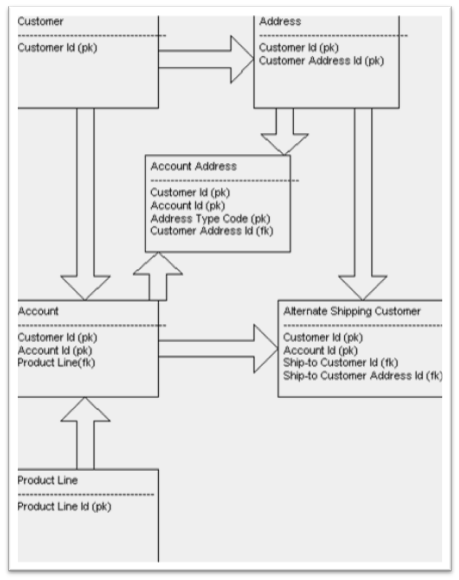

Diagram 3

One more complication: Customer Tom wants to send the Three Stooges picture plates to his Grandmother but have the bills sent to him. Doing this would require us to establish Grandma as a customer and point the shipments to her. However, we can’t use the junction between account and address because that would point us to the wrong customer ID. Therefore, we need to create an optional alternate customer table. We would only need to insert to this table if the shipments are going to a different customer then the bills. This table must contain the account number, Grandma’s customer ID and Grandma’s address pointer. (see diagram 4)

Diagram 4

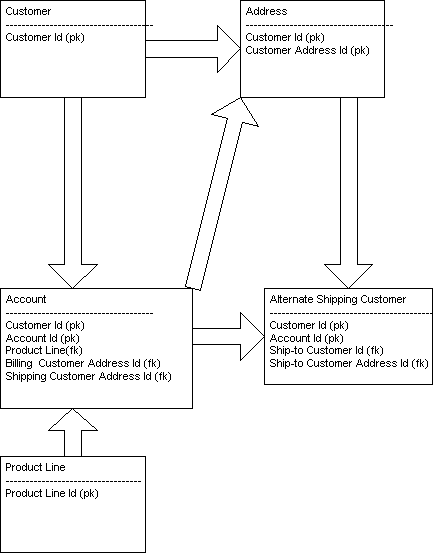

Pretty complicated to ship Elvis velvet paintings, isn’t it? That is what we thought. Even if performance was not an issue, the complications involved in maintaining and accessing these tables was not acceptable. We agreed that diagram 4 represented our logical needs but we needed to create something more usable physically. The most obvious change was getting rid of the junction between account and address. We only have two usages of address (billing and shipping). Therefore, we decided to add two columns to the account table in the physical data model: bill address ID and ship address ID. If the customer was sending the bills and the shipments to the same address they could contain the same ID. (see diagram 5)

The biggest drawback of this design is that it limits the number of alternate customers to one and the number of addresses for an account to two. We discussed this with the business and agreed that it would be impractical to have more than one customer at a time designated as the recipient of the shipments.

Conclusion

To summarize, compare diagram 6 with diagram 4.We managed to improve performance, improve usability, and reduce DASD. While we did violate some pure modeling rules we provided a faster, cheaper, and more usable database to the business. It was important to go through this entire process when performing logical to physical data model transformation. If you jump right to the physical model without truly understanding the logical model, it becomes easy to make mistakes and incorrect assumptions. Keep in mind the words of Albert Einstein, “Everything should be made as simple as possible, but not simpler.”