Data warehousing and business intelligence users need trustworthy data. How to ensure that? Perform three types of testing to confirm data integrity.

In the Gartner IT Glossary, data integrity and data integrity testing are defined as follows:

- Data Integrity: the quality of the data residing in data repositories and database objects. The measurement which users consider when analyzing the value and reliability of the data.

- Data Integrity Testing: verification that moved, copied, derived, and converted data is accurate and functions correctly within a single subsystem or application.

Data integrity processes should not only help confirm a project’s data integrity, but also improve and maintain the accuracy and consistency of data over its lifecycle. This includes data management best practices such as preventing data from being altered each time it is copied or moved.

Processes should be established to always maintain Data Warehouse and Business Intelligence (DWH/BI) data integrity. Data, in its production state, is the driving force behind effective decision making. Errors with data integrity commonly arise from human error, noncompliant operating procedures, errors in data transfers, software defects, compromised hardware, and physical compromise to devices.

Data Warehouse and Business Intelligence (DWH/BI) initiatives need “data integrity testing”—testing processes that support:

- All data warehouse sources and target schemas

- ETL processes

- Business intelligence components/front-end applications

There are other categories of DWH/BI and ETL testing, such as functional, performance, security, scalability, system, and integration testing, end-to-end, etc.

Classifications of Data Integrity for DWH/BI Systems

Data Integrity is an umbrella term that refers to the consistency, accuracy, and correctness of data stored in a database. There are three primary types of data integrity:

- Entity Integrity ensures that each row in a table (for example) is uniquely identified and without duplication. Entity integrity is often enforced by placing primary key and foreign key constraints on specific columns. Testing may be achieved by defining duplicate or the null values in test.

- Domain Integrity requires that each set of data values/columns falls within a specific permissible defined range. Examples of domain integrity are correct data type, format, and data length; values must fall within the range defined for the system; null status; and permitted size values. Testing may be accomplished, in part, using null, default and invalid values.

- Referential Integrity is concerned with keeping the relationships between tables Referential integrity is often enforced with Primary Key (PK) and Foreign Key (FK) relationships. It may be tested, for example, by deleting parent rows or the child rows in tables.

Verifying Data Integrity in Schemas, ETL Processes, and BI Reports

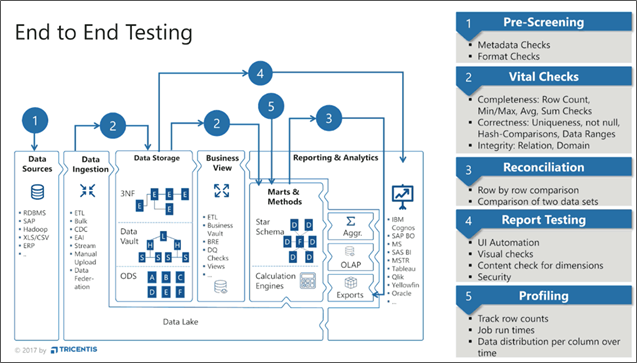

The framework in Figure 1 illustrates the major DWH/BI components that are generally tested in all categories of end-to-end DWH/BI testing. Data integrity testing often requires considerable time and resources.

The following DWH/BI components are presented in the testing framework:

Verifying data source/target requirements and schema designs

Requirements and schema-level tests confirm to what extent the design of each data component matches the targeted business requirements.

This process should include the ability to verify:

- Business and technical requirements for all source and target data

- Data integrity specifications technically implemented (DBMS, file systems, text files, etc.)

- Data models for each implemented data schema

- Source to target data mappings data loaded into DWH targets. Examples of sources and associated targets include source data that are loaded to staging targets as well as staging data that are loaded to data warehouse or data mart targets

Schema quality represents the ability of a schema to adequately and efficiently project ‘information/data’. Schema in this definition refers to the schema of the data warehouse, whether it is a conceptual, logical, or physical schema, star, constellation, or normalized schema. However, this definition is extended here to include the schemas of all data storages used in the whole data warehouse system including the data sourcing, staging, the operational data store, and the data marts. It is beneficial to assess the schema quality in the design phase of the data warehouse.

Detecting, analyzing, and correcting schema deficiencies will boost the quality of the DWH/BI system. Schema quality could be viewed from various dimensions, namely schema correctness, schema completeness, schema conformity, schema integrity, interpretability, tractability, understandability, and concise representation.

Testing source and target data integrity

A properly designed ETL system:

- Extracts data from source systems

- Enforces data quality and consistency standards

- Conforms data so that data sources can be used collectively

- Delivers data in a format that enables application developers to build applications

Data warehouse integrity testing and evaluations focus on ETL processes. Various functional and non-functional testing methods are applied to test the ETL process logic. The goals are to verify that valid and invalid conditions are correctly processed for all source and target data, ensuring primary and foreign key integrity (i.e., referential integrity), the correctness of data transformations, data cleansing, and application of business rules.

Testing BI report data integrity

BI applications provide an interface that helps users interact with the back-end data. The design of these reports is critical for understanding and planning the data integrity tests.

Complex business rules are often applied to original source data through data governance then loaded to the data warehouse. The data warehouse information is then delivered in the form of BI dashboards/reports and is available for business executives to make crucial decisions with confidence. It is therefore essential to assure that data warehouse data (which is further processed in BI applications) is accurate. Those conducting BI application tests must prepare to answer the business teams’ questions: “Do these reports reflect the DW numbers correctly? If so, or if not so, how can we make sure we are looking at the right data to make the right decisions”?

The traditional approach for BI report testing has several flaws. Often, much time is needed to identify and fix issues discovered in BI reports. The numbers on BI dashboards are often aggregated to a high level from the lower granularity of the DW. And this raw data is often transformed at each iteration through its journey to the dashboards. Choosing any value from a BI dashboard, then working to reconcile it against data warehouse data tables, is a complicated and tedious process.

A Data Integrity Testing Framework and a Checklist

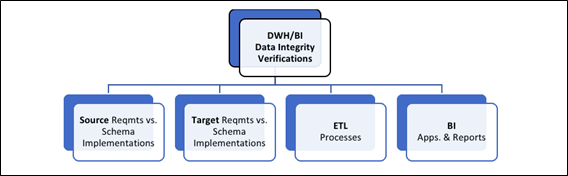

DWH/BI data integrity verification is categorized as a set of processes. Figure 2 shows a verification classification framework for the techniques applicable to sources and targets in data warehouse, ETL process, and BI report applications.

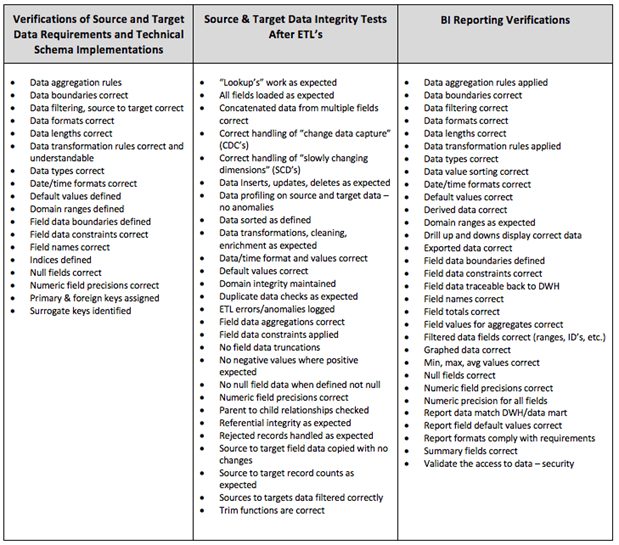

The “what”, “when” and “where” of DWH/BI data integration testing is represented in Figure 3.

- Column headings represent when and where data-related testing will take place

- Rows represent “what” data-related items should be considered for testing

Conclusion

- Data in its final state is the driving force behind organizational decision-making.

- Raw data is often changed and processed to reach a usable format for BI reports. Data integrity practices ensure that this DWH/BI information is attributable and accurate.

- Data can easily become compromised if proper measures are not taken to verify it as it moves from each environment to become available to DWH/BI projects. Errors with data integrity commonly arise through human errors, noncompliant operating procedures, data transfers, software defects, and compromised hardware.

- By applying the strategies introduced in this blog, organizations should be able to improve quality and reduce time and costs when developing a DWH/BI project.