Data quality is a function of data’s fitness for use for a particular purpose in a given context, measured against stated requirements or guidelines. High data quality engenders trust in operational and strategic decisions.

Data quality is a perception or an assessment of data’s fitness to serve its purpose in a given context (Thomas C. Redman, 2008). It is an essential characteristic that determines the credibility of data for decision-making and operational effectiveness. Data governance is the main vehicle for delivering high-quality data in an organization: it develops and implements the policies, standards, processes, and practices that instill a desire to achieve and sustain high-quality data across the enterprise.

As part of enterprise data management, Data Quality Management (DQM) is a critical support process in organizational change management. Data Quality Management is a continuous process for defining the parameters for specifying acceptable levels of data quality to meet business needs, and for ensuring that data quality meets these levels.

DQM involves analyzing the quality of data, identifying data anomalies, and defining business requirements and corresponding business rules for asserting the required data quality. It institutes inspection and control processes to monitor conformance with defined data quality rules and, when necessary, develops processes for data parsing, standardization, cleansing, and consolidation. Additionally, DQM incorporates issue tracking to monitor compliance with defined data quality Service Level Agreements.

Data Quality Characteristics

Aspects (also known as dimensions) of data quality include:

- Accuracy – The extent to which the data are free of identifiable errors.

- Completeness – All required data items are included, so that the entire scope of the data is collected, with any intentional limitations documented.

- Timeliness – The extent to which the data is up to date and available within a useful time period.

- Relevance – The extent to which data are useful for the purposes for which they were collected.

- Consistency across data sources – The extent to which data are reliable and the same across applications.

- Reliability – Data definitions are important to data quality. Data users must understand what the data mean and represent when they are using the data. Each data element should have a precise meaning or significance.

- Appropriate presentation – Data is presented in a manner and format that is consistent with its intended use and audience expectations.

- Accessibility – The extent to which data items are easily obtainable and legal to access, with strong protections and controls built into the process.
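Some of these characteristics can be expressed as simple ratios. The following is a minimal sketch, not a standard formula: it assumes records are held as Python dictionaries, treats `None` and the empty string as missing, and uses hypothetical field names (`id`, `email`, `updated`) and a hypothetical 30-day freshness window.

```python
from datetime import datetime, timedelta


def completeness(records, required_fields):
    """Fraction of required field values that are present (not None or empty)."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0
    filled = sum(
        1
        for rec in records
        for field in required_fields
        if rec.get(field) not in (None, "")
    )
    return filled / total


def timeliness(records, timestamp_field, as_of, max_age):
    """Fraction of records whose timestamp falls within max_age of as_of."""
    if not records:
        return 1.0
    fresh = sum(
        1
        for rec in records
        if rec.get(timestamp_field) is not None
        and as_of - rec[timestamp_field] <= max_age
    )
    return fresh / len(records)


# Hypothetical sample data, for illustration only.
customers = [
    {"id": 1, "email": "a@example.com", "updated": datetime(2024, 1, 10)},
    {"id": 2, "email": "", "updated": datetime(2023, 6, 1)},
    {"id": 3, "email": "c@example.com", "updated": None},
    {"id": 4, "email": "d@example.com", "updated": datetime(2024, 1, 14)},
]
as_of = datetime(2024, 1, 15)

completeness_score = completeness(customers, ["id", "email", "updated"])
timeliness_score = timeliness(customers, "updated", as_of, timedelta(days=30))
```

Characteristics such as relevance or appropriate presentation depend on the judgment of data users and do not reduce to a ratio this easily.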

Blended and new data sources, master data management efforts, and data integration initiatives can all create a need for data quality management. These projects share the goal of improving data quality for organizational use, and the data quality process should continually enhance each data quality characteristic of the data being cleansed.

A rigorous data quality program is necessary to achieve lasting improvements in data quality and integrity. Approaching data quality with numerous targeted solutions that address single issues (clean one file for accuracy, then clean another file for accuracy, clean the first file for completeness, clean a third file for accuracy, start a new cleansing effort at another business unit, etc.) wastes business and technical resources and never addresses the root causes of the organization’s data quality and data management challenges.

The general approach to data quality management is a version of the Deming cycle of Plan, Do, Check, Act (W. Edwards Deming). When applied to data quality within the constraints of defined data quality metrics, it involves:

- Planning for the assessment of the current state and identification of key metrics for measuring data quality

- Deploying processes for measuring and improving the quality of data

- Monitoring and measuring the levels in relation to the defined business expectations

- Acting to resolve any identified issues to improve data quality and better meet business expectations
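One pass through this cycle can be sketched in a few lines. This is an illustrative outline only: the function name `pdca_iteration`, the metric names, the target values, and the sample scores are all hypothetical, and a real program would obtain its measurements from profiling rather than from a fixed dictionary.

```python
def pdca_iteration(targets, measure):
    """Run one pass of the cycle and return observed scores and gaps.

    targets: metric name -> minimum acceptable score (the plan).
    measure: callable that returns the current score for a metric name.
    """
    # Do: take a measurement for every planned metric.
    observed = {name: measure(name) for name in targets}
    # Check: compare each measurement with the business expectation.
    gaps = {
        name: round(targets[name] - score, 4)
        for name, score in observed.items()
        if score < targets[name]
    }
    # Act: the caller decides how to resolve each reported gap.
    return observed, gaps


# Hypothetical targets and measurements, for illustration only.
targets = {"completeness": 0.98, "accuracy": 0.95}
sample_scores = {"completeness": 0.91, "accuracy": 0.97}

observed, gaps = pdca_iteration(targets, sample_scores.get)
```

Here only completeness falls short of its target, so it is the only metric returned in `gaps` for corrective action before the next iteration.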

All these activities require the services of a strong, sustained enterprise data management program, starting with data governance. This data management initiative should define the data quality parameters, identify the data quality metrics for each critical data element, and work with the data quality professionals to ensure that data quality is part of the data management process. It should also act to resolve any issues that result from poor or inconsistent data governance processes or metadata standards, so that higher data quality can be achieved.

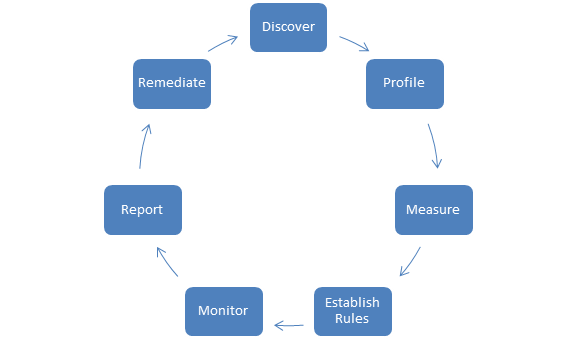

Data Quality Process

A repeatable process for establishing and sustaining data quality in any organization should include the following components, based on work from a variety of quality experts (e.g., W.E. Deming, P. Crosby, L. English, T.C. Redman, D. Loshin).

- Data Discovery: The process of finding, gathering, organizing and reporting results about the organization’s critical data from a variety of sources (e.g., files/tables, record/row definitions, field/column definitions, keys)

- Data Profiling: The process of analyzing identified data in detail, comparing the data and its actual metadata to what should be stored, calculating data quality statistics and reporting the measures of quality for the data at a specific point in time

- Data Quality Rules: Based on the business requirements for each Data Quality measure/dimension, creating and refining the business and technical rules that the data must adhere to so it can be considered of sufficient quality for its intended purpose

- Data Quality Monitoring: The continued monitoring of Data Quality, based on the results of executing the Data Quality rules, and the comparison of those results to defined error thresholds, the creation and storage of Data Quality exceptions and the generation of appropriate notifications

- Data Quality Reporting: Creating, delivering and refining reports, dashboards and scorecards used to identify trends against stated Data Quality measures and to examine detailed Data Quality exceptions

- Data Remediation: The continued correction of Data Quality exceptions and issues as they are reported to appropriate staff members
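The rules and monitoring components above can be illustrated with a small sketch. It assumes records are Python dictionaries and that each rule carries an error threshold agreed with the business. The `Rule` class, the `run_rules` function, the two example rules, their thresholds, and the sample records are all hypothetical, and the email pattern is deliberately simplistic.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when the record passes
    max_error_rate: float          # error threshold for this rule


def run_rules(records, rules):
    """Apply every rule to every record; return exceptions and rule status."""
    exceptions = []
    status = {}
    for rule in rules:
        failed = [rec for rec in records if not rule.check(rec)]
        # Store one exception per failing record for later remediation.
        for rec in failed:
            exceptions.append({"rule": rule.name, "record_id": rec.get("id")})
        error_rate = len(failed) / len(records) if records else 0.0
        status[rule.name] = {
            "error_rate": error_rate,
            "breached": error_rate > rule.max_error_rate,
        }
    return exceptions, status


# Simplistic pattern, used only to illustrate a technical rule.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

rules = [
    Rule("email_format",
         lambda r: bool(EMAIL_PATTERN.match(r.get("email") or "")), 0.10),
    Rule("age_in_range",
         lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120, 0.30),
]

# Hypothetical sample data, for illustration only.
records = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "not-an-email", "age": 29},
    {"id": 3, "email": "c@example.com", "age": 150},
    {"id": 4, "email": "d@example.com", "age": 41},
]

exceptions, status = run_rules(records, rules)
# Rules whose error rate exceeds the threshold would trigger a notification.
alerts = [name for name, result in status.items() if result["breached"]]
```

In this sample each rule fails for one of the four records. Only `email_format` breaches its threshold, so it is the only rule listed in `alerts`, while both failing records are kept in `exceptions` for reporting and remediation.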

Benefits of Data Quality Management

Many organizations struggle to identify the benefits of launching a data quality management program because they have not spent time evaluating and quantifying the costs of poor data quality. Most data quality benefits can be attributed to cost reduction, productivity improvement, revenue enhancement, or another financial measure.

In a 2011 report, Gartner showed that 40% of the anticipated value of all business initiatives was never achieved. Poor data quality in both the planning and execution phases of these initiatives was a primary cause. In addition, poor data quality was shown to affect operational efficiency, risk mitigation, and agility by compromising the decisions made in each of these areas. Without attention to data quality and its management, many organizations incur unnecessary costs, suffer from lost revenue opportunities and experience reduced performance capabilities.

Conclusion

Data Quality Management can provide any organization with lasting benefits when it is included as part of enterprise data management, supported by an enterprise data governance program and an active metadata management effort, and performed according to the practices recommended by data quality experts. The most important benefit it can offer is data that is “fit for its proper purpose.”