Using a specialized data validity dimension in a data warehouse design can support data quality and analytics capabilities.

Introduction

While working on an Enterprise Data Warehouse for a state court system the issue of poor data quality in the source systems became apparent. Referential integrity was not strictly enforced and there was very little in the way of attribute level constraints. One normally expects that these types of constraints would be enforced for an OLTP application, whether through the application, in the database, or both.

Of course, one should never be surprised when there is poor data quality in the source systems – poor data quality is the norm rather than the exception. According to The Data Warehouse Institute (TDWI) over $600 billion a year is lost due to poor data quality.

Data Validity

One type of data quality error this EWSolutions project experienced was found in the date values, where the date value was a real date, e.g. 10/15/2205, but was obviously invalid per the business context. The team classified these types of data quality errors as data validity errors.

The primary focus for the initial dependent dimensional data mart was to enable analysis of court performance – because of this we could not avoid populating records with data validity errors and act as if a court case simply did not exist. Due to the anticipated cost of fixing the source system application it was determined that these data validity errors would be accepted they came into the Data Warehouse. Some measures in a fact record might not be accurate because of the data validity errors but other measures were still accurate and useful. Training in data quality, metadata management, and other areas of data management can support improved data quality activities.

There were multiple date related fact table measures such as Number of Days between Filing and Hearing, Number of Days between Hearing and Sentencing, etc. Due to the data validity errors, it was possible for exceptions such as a hearing date to appear to be before a filing date, a sentencing date to occur before a hearing date and for dates to be set unreasonably into the future or past, etc.

There were concerns that these data validity exceptions would skew the results of OLAP analysis and result in a loss of confidence in the Data Warehouse as in Figure 1.

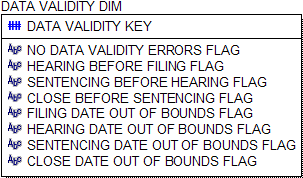

Figure 1: Example chart with data skew due to invalid data

In Figure 1, Court 5 has data skew because there are records with unreasonable hearing date values.

Data Validity Dimension

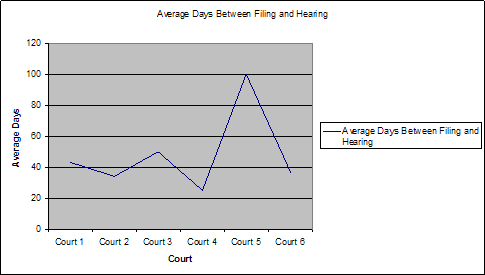

To address these data validity issues a new dimension was created, based on the work of Larry Rossi – the Data Validity dimension – consisting of a numeric surrogate key and a series of data quality flag attributes with a domain of “Y” or “N.” The Data Validity dimension serves three (3) purposes:

- For filtering out records with a particular type of data validity error

- For result set value comparison – with and without data validity errors

- For flagging court cases with data validity errors for upstream correction

Figure 2: Data Validity Dimension

Populating the Data Validity dimension

To populate the non-key attributes in the dimension, a Cartesian product of all the possible combinations of data validity errors is generated using SQL. Normally Cartesian products are avoided like the plague when writing SQL, but this is one case where it is useful. One way to create the Cartesian product is to create a tables (call it dummy_cartesian) with one column (call it “a”) and two rows – one populated with a “Y” and the other with an “N.” Then use an alias to reference this table for as many non-key attributes as required. In this case there are 7 non-key attributes for which we need a Cartesian product – the “NO DATA VALIDITY ERRORS FLAG” is a special case which we will discuss later.

For example,

select a.a, b.a, c.a, d.a, e.a, f.a, g.a

from dummy_cartesian a, dummy_cartesian b,dummy_cartesian c,

dummy_cartesian d, dummy_cartesian e, dummy_cartesian f,

dummy_cartesian g

Notice that there is no “where” clause in the SQL – this is what generates the Cartesian product of every possible combination of attribute values. The result will be 64 rows or 27 – as there are 2 rows in the dummy_cartesian table. Use whatever method is appropriate for your environment to generate the surrogate key values.

The first non-key attribute in Figure 2 called “NO DATA VALIDITY ERRORS FLAG”. This attribute is used to eliminate the need to test each attribute for a value when you only want to query for records without data validity errors. It should be set to “Y” only if all the remaining non-key attributes are set to “N.” Doing this will require an extra processing step to populate the table.

High Quality Analytics

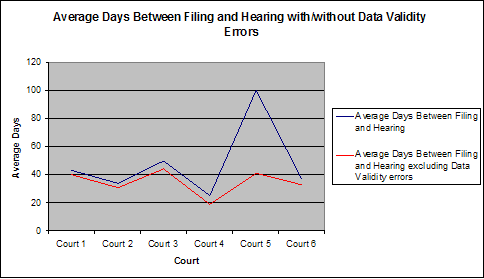

With the Data Validity dimension in place and references to the surrogate key populated in the fact tables, higher quality analytics were enabled thus giving the users greater confidence in the data as the following chart demonstrates.

Figure 3: Example chart showing values with and without Data Validity errors

The Data Validity dimension explains unexpected variances and reports on data validity errors forwarded to the appropriate court manager for correction and for educating data entry clerks. The chart in Figure 3 now shows why Court 5 had such a high number of Average Days between Filing and Hearing.

Conclusion

Lack of confidence in the data in a Data Warehouse environment is a leading cause of failure when the users do not judge a Data Warehouse successful. Ideally, data validity errors are corrected upstream before being loaded into the Data Warehouse, but if you encounter a situation where you have to accept data validity errors, the Data Validity dimension can prove to be invaluable in explaining problems with the data and additionally raise awareness in the organization of the impact of poor data quality.