Introduction

In the beginning were applications. And applications served the corporation well until there arose a desire for integrated, historical information. However, applications supported neither integration nor historical data. When it was noticed that applications, once built and put into production, could not be reshaped, the limitations of trying to get information out of applications became obvious. In a word, applications did not and could not support the organizational desire for corporate and analytical information.

Out of the swamp created by applications came the notion that there should be a split between transaction/operational processing and informational processing. With such a split, one environment could support the clerical needs of the corporation and another environment could support its management needs. Thus was born the concept of the data warehouse. The applications of yesterday were divided into transaction processing applications and decision support processing. Informational processing centered on a structure called a data warehouse. The data warehouse represented the opportunity for the corporation to create integrated data and to collect historical data.

Enter the Data Warehouse

The data warehouse contained integrated, very granular, detailed historical data. The granularity of the data in the data warehouse allowed it to be reshaped in many different ways, providing a foundation of flexibility for all sorts of processing. In addition, data warehousing made data accessible, as opposed to leaving it tied up in old applications where it was not easy to access.

The first big step in the evolution of information systems, then, came when it was recognized that applications simply were not an adequate foundation for the information needs of the organization.

The Evolution Continues

But the evolution of information systems was just starting when the data warehouse concept was conceived and popularized. As powerful as the concept of the data warehouse was, it was not complete. There were informational needs that the data warehouse by itself could not satisfy. One of those needs was high-performance OLTP processing in the informational environment. Soon a structure appeared to accommodate this need: the operational data store, or “ODS”. The ODS is a hybrid structure that has some characteristics of the data warehouse and other characteristics of the operational environment. The ODS provides the capability for transaction processing and for updates made directly in the ODS. But the ODS also provides the capability of operating on integrated data.

To build the data warehouse, someone has to do the messy job of going back into the operational environment and gathering, converting, and cleansing old application data. In almost all cases, application data was never designed to be integrated with anything else. So when the data warehouse appeared, there was a genuine need for retroactive integration of application data. The result was twofold: a dirty, complex task of bringing application data over into an integrated, cleansed environment, and the creation of a data management function. Integrating old application data from many application systems is daunting. In the best of circumstances it is not easy, and in the worst of circumstances it is almost impossible. But the data warehouse architect was forced to roll up his/her sleeves and accomplish the task if the benefits of the data warehouse were to be realized.

Almost overnight a software industry called “ETL” (extract/transform/load) sprang up. In some places this ETL software was also called “i/t” (integration/transformation) software. ETL/i/t software allowed data to be integrated into the data warehouse environment in an automated manner. Before ETL/i/t software products existed, there was no way to populate the data warehouse except with hand-written code. A programmer wrote C or Cobol code to pull data from the application environment into the data warehouse environment manually. But with ETL, the data warehouse could in short order be populated automatically, which emancipated the programmers of the world from a tedious and thankless job.
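
To make the extract, transform, and load steps concrete, here is a minimal sketch in Python. It assumes two hypothetical application systems that record the same customers in incompatible ways (different keys, different gender codes, different date formats). The record layouts and the `extract`, `transform`, and `load` functions are illustrative only; they are not the interface of any ETL product.

```python
from datetime import date, datetime

# Hypothetical source rows from two application systems that were never
# designed to be integrated: different keys, codes, and date formats.
BILLING_APP = [
    {"cust_no": "00042", "sex": "M", "opened": "03/15/1998"},
    {"cust_no": "00077", "sex": "F", "opened": "11/02/1999"},
]
ORDER_APP = [
    {"customer_id": 42, "gender": 1, "first_order": "1998-04-01"},
    {"customer_id": 91, "gender": 0, "first_order": "2000-01-20"},
]


def extract():
    """Extract: pull raw rows from each application, tagged with their source."""
    for row in BILLING_APP:
        yield "billing", row
    for row in ORDER_APP:
        yield "orders", row


def transform(source, row):
    """Transform: convert each source's encoding into one integrated format."""
    if source == "billing":
        return {
            "customer_id": int(row["cust_no"]),  # zero-padded string -> int key
            "gender": row["sex"],
            "since": datetime.strptime(row["opened"], "%m/%d/%Y").date(),
        }
    # The order system encodes gender as 1/0 and dates as ISO strings.
    return {
        "customer_id": row["customer_id"],
        "gender": "M" if row["gender"] == 1 else "F",
        "since": date.fromisoformat(row["first_order"]),
    }


def load(warehouse, record):
    """Load: keep one integrated row per customer, with the earliest 'since' date."""
    existing = warehouse.get(record["customer_id"])
    if existing is None or record["since"] < existing["since"]:
        warehouse[record["customer_id"]] = record


warehouse = {}
for source, row in extract():
    load(warehouse, transform(source, row))
```

After the loop, customer 42 appears once in `warehouse`, even though both application systems described that customer in their own way. Real integration rules (which source wins, how conflicts are resolved) are a design decision; the "earliest date wins" rule here is just an assumption for the sketch.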

Another important feature of the evolution of the data warehouse was the data mart. Different departments needed to see data differently for their informational processing, so the very granular data found in the data warehouse was gathered and reshaped into a form recognizable to a department. Typically the departments that built data marts were accounting, marketing, sales, and finance. The data marts featured multidimensional databases (OLAP databases). Star joins and fact tables were found in the data marts as a result of the analysis done by database designers working in the departmental multidimensional, OLAP mode. Data marts were designed around the department’s informational requirements.
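
As a rough illustration of reshaping granular data for one department, the Python sketch below joins hypothetical sales fact rows to a product dimension on `product_id` (a one-dimension star join) and summarizes the result by month and product category. The table contents, the column names, and the choice of (month, category) as the sales department's view are all assumptions made for the example.

```python
from collections import defaultdict

# Granular warehouse data: one row per sales transaction (hypothetical).
SALES_FACTS = [
    {"product_id": 1, "month": "2001-01", "amount": 120.0},
    {"product_id": 2, "month": "2001-01", "amount": 80.0},
    {"product_id": 1, "month": "2001-02", "amount": 200.0},
    {"product_id": 3, "month": "2001-02", "amount": 50.0},
]

# Product dimension: describes each product_id referenced by the facts.
PRODUCT_DIM = {
    1: {"name": "widget", "category": "hardware"},
    2: {"name": "gizmo", "category": "hardware"},
    3: {"name": "manual", "category": "publications"},
}


def build_sales_mart(facts, products):
    """Join each fact to the product dimension, then total amounts by
    (month, category), the shape a hypothetical sales department wants."""
    totals = defaultdict(float)
    for fact in facts:
        category = products[fact["product_id"]]["category"]  # star join on product_id
        totals[(fact["month"], category)] += fact["amount"]
    return dict(totals)


sales_mart = build_sales_mart(SALES_FACTS, PRODUCT_DIM)
```

Because the warehouse keeps the granular transactions, another department could build a differently shaped mart (by customer, by region) from the same facts without going back to the applications.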

Yet another feature of the evolving data warehouse environment was an exploration facility, which allowed heavy statistical analysis to be done on data found in the warehouse. In the early days of the data warehouse, heavy statistical analysis was done directly against the warehouse itself. But once there was any significant demand for exploration and data mining, a separate processing facility became necessary. This facility was called an exploration warehouse or a data mining warehouse. The exploration facility gave the analysts who look for hidden business patterns their own place to work, where they did not interfere with the other people who needed to use the data warehouse.

Still another component of the data warehouse was the extension of data into overflow storage. Data warehouses (by this time called enterprise data warehouses, or “EDW”) started to contain more data than conventional technology could easily and efficiently accommodate. As a warehouse grew larger, the overflow, dormant data that accumulated in it flowed into a facility called “near line storage”. Near line storage was much cheaper than disk storage. Given that large amounts of data went dormant, and given that there was no need for update in a data warehouse, near line storage fit the need to extend the data warehouse into overflow storage very nicely.
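
The overflow idea can be sketched as a simple placement rule. The Python below assumes each warehouse row carries a hypothetical `last_accessed` date and treats anything untouched for longer than an assumed threshold (365 days here) as dormant and eligible for near line storage. Actual products and sites use their own dormancy criteria; this only illustrates the split.

```python
from datetime import date, timedelta

# Hypothetical warehouse rows with the date each row was last accessed.
ROWS = [
    {"id": 1, "last_accessed": date(2001, 6, 1)},
    {"id": 2, "last_accessed": date(1999, 2, 10)},
    {"id": 3, "last_accessed": date(2000, 12, 5)},
]


def split_dormant(rows, today, dormant_after_days):
    """Split rows into (active, dormant) by time since last access.

    Warehouse data is not updated, so dormant rows can be moved to cheaper
    near line storage without any need to keep two copies in sync.
    The threshold is an assumption supplied by the caller.
    """
    cutoff = today - timedelta(days=dormant_after_days)
    active = [r for r in rows if r["last_accessed"] >= cutoff]
    dormant = [r for r in rows if r["last_accessed"] < cutoff]
    return active, dormant


disk, near_line = split_dormant(ROWS, today=date(2001, 7, 1), dormant_after_days=365)
```

With these sample rows, only row 2 has been idle for more than a year, so it is the only one routed to near line storage.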

The framework that the data warehouse evolved into was called the “Corporate Information Factory.”

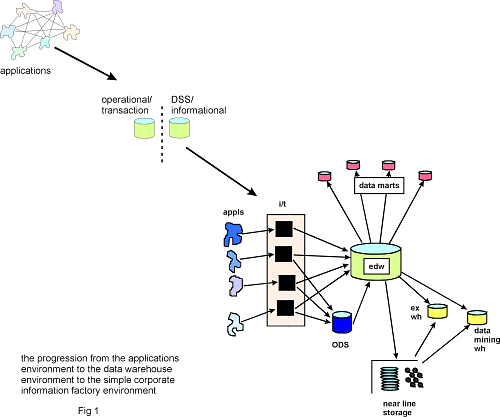

Fig 1 shows the evolution from applications into the corporate information factory.

The evolution to the simple version of the corporate information factory provided the corporation with several very important capabilities:

- the creation of a granular pool of data which provided:

  - a flexible means of displaying data to many different organizations in many different ways, without having to go back and pull the data from application systems each time there was a need to look at the data differently,

  - a foundation on which new analyses could proceed immediately, rather than having to go back and pull data from old applications,

  - a historical foundation of data where the detailed history of transactions was available,

- the ability to do high performance OLTP processing on decision support data through an ODS,

- the ability to accommodate the many individual needs of different departments through data marts,

- the ability to accommodate an almost infinite amount of data through the use of near line storage, and

- the ability to accommodate huge amounts of heavy statistical processing by means of an exploration/data mining data warehouse.

In a word, the informational needs of the corporation were being met for the first time.

But the evolution of the corporate information factory did not stop. The evolution continues today. The forces of technology continue to shape the corporate information factory.

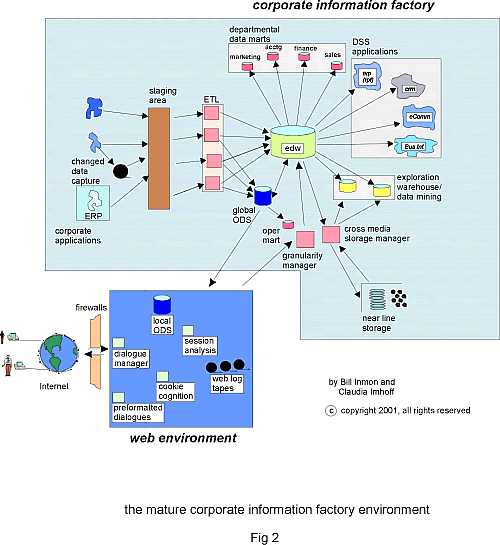

Some of the new architectural components that are finding their way into the corporate information factory are:

- the web environment,

- the DSS application environment, where important processing such as CRM and Business Intelligence occurs,

- a staging area for large corporations that need to stage data prior to ETL processing,

- ERP processing,

- granularity management,

- operational marts, where data in the ODS can be accessed and analyzed,

- cross-media storage management, and so forth.

One of the interesting aspects of the corporate information factory is that it evolves in either a proactive mode or a reactive mode. Corporations that embrace and understand the corporate information factory move their organizations forward gracefully and with ease. These forward-thinking corporations operate in a proactive mode. Corporations that do not understand, or that reject, the corporate information factory evolve their information architectures as a result of pain and waste. When the pain and waste become large enough, the evolution to the corporate information factory continues. These corporations operate in a reactive mode.

Years ago there was a television commercial for Fram oil filters. In the commercial the mechanic turns to you and says: “You can pay me now or you can pay me later. You can add one of these new filters for $10.00 right now or you can ignore the need for a new oil filter and I will replace the engine for you a year from now for $5,000. It’s your choice.” The same can be said for the corporate information factory. You can move your organization down the path of evolution to the corporate information factory in a peaceful, progressive manner, or you can ignore it and be forced to evolve at a later point in time. It’s your choice. Do you want to be proactive or reactive?

The corporate information factory continues to grow and evolve. Undoubtedly it will evolve beyond its currently known boundaries. And with each new expansion, the information processing capabilities grow.

Fig 2 shows a mature rendition of the evolution of the corporate information factory.

Conclusion

Throughout the evolution of the corporate information factory, there is an accompanying evolution of metadata. Metadata was valuable before there ever was a corporate information factory. But with the advent of the corporate information factory there suddenly is a mandate for metadata. The corporate information factory entails many different architectural components, and for it to operate properly those components need to work in conjunction with each other. The glue that holds the corporate information factory together, then, is metadata. Metadata allows one architectural component to communicate with another. For this reason alone (and there are plenty more), metadata plays an enhanced role in the corporate information factory.

The type of metadata that is germane to the corporate information factory is distributed metadata. Given the need to exchange metadata across an entire architecture, a large central repository of metadata is no longer required.