Metadata-based extraction, transformation, and loading (ETL) can support a new approach to any organization’s data integration and development practices

Introduction

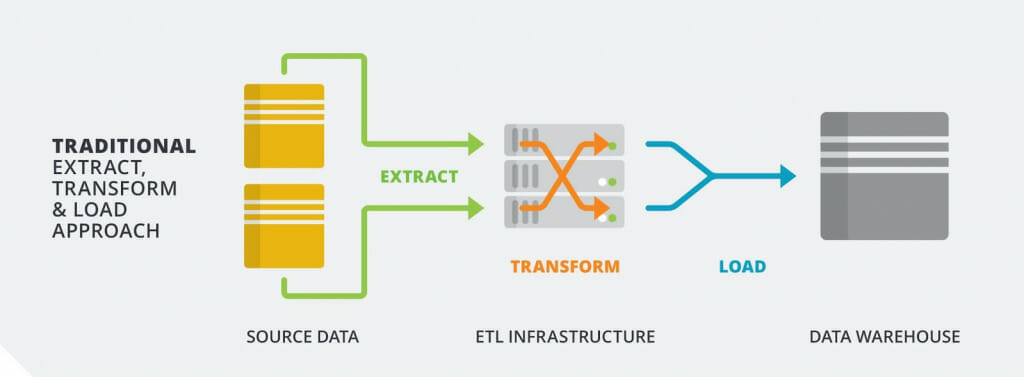

Traditional extraction, transformation and loading (ETL) software is a computer programming tool that enables a developer to create custom ETL code. Custom coding is a management challenge with expensive overhead and complex coordination between the business, application, and data teams. In addition, the code can be unreliable because it is hard-coded and tightly binds to database schema, so any environmental changes causes the program to fail. This situation can become more problematic if the data architecture incorporates NoSQL databases with dynamic, hierarchical schemas.

Metadata-based extraction, transformation, and loading (ETL) can support a new approach to any organization’s data integration and development practices

What if data integration is not a programming exercise at all? Data integration is a data issue that should be resolved with a data methodology. The growing challenge facing many organizations’ data architecture and integration demands a flexible data architecture that can require an equally flexible ETL-based data integration solution. Metadata ETL provides this flexibility by managing schema, the data model, and ETL together in a holistic solution.

Metadata-based ETL Concepts

Metadata based ETL implements the Declarative Programming Paradigm which differs from Object Oriented and Procedural Programming. Declarative separates “what should done” from the “how to do it”. The “what should be done” is defined in metadata, similar to a data dictionary which defines the data mappings, data model, datatypes, data transformations, etc. The “how to do it” is implemented in pre-built in code that uses encapsulation of ETL functionality.

A declarative metadata solution is pointless if the metadata is as complex as the coding it is replacing. Fortunately, ETL metadata has the balance of minimalism and simplicity while meeting business and technical requirements. The metadata can be represented in a data dictionary style format that is easy to read and understand. It reflects the format of ETL technical requirements documents.

Declarative Metadata ETL

Declarative metadata ETL has the ability to be responsive, in real-time, to source schema changes from either SQL or NoSQL databases. These changes are detected and added to the data dictionary. Optionally, DDL of the schema change can be applied to an SQL destination based on the rules found in the data model. This feature displays another important concept of Metadata ETL, loose binding to schema. Most ETL vendors have strict binding, meaning any schema change at the source or destination will cause the package to fail. Metadata ETL will rarely fail from a schema’s changes.

Metadata ETL typically uses JavaScript Object Notation (Json) documents for internal processing which means they support hierarchical data and are ideal for SQL and NoSQL integration. The responsive, rules-based ETL allows automated conversion of NoSQL to SQL that normalizes hierarchical data to a tabular format. Use cases for this method include NoSQL to a data warehouse/reporting database. Metadata ETL is agnostic to the database format used, such as SQL or NoSQL, or a hybrid of the two.

Another value proposition for metadata ETL is the bulk/ad hoc manipulation of the metadata. The entire database schema and the data model can be validated and manipulated more effectively. Simple data comparison techniques can quickly identify changes between environments or systems. ETL changes can be implemented easily with the capability to search metadata and can be edited without need to traverse ETL code manually.

Metadata-based ETL Use Case Example

Reviewing an early adopter of metadata ETL can offer some real-world examples of the value of this approach. Equator is a software as a services (SaaS) provider to the mortgage banking industry. Their clients include 3 of the 4 largest banks in the US. Business operations require feeding complex data in a nightly batch mode to their clients. The OLTP source systems are a hybrid of relational and semi-structured data populating multiple normalized ODS style databases. They have approximately one million data attributes in 5,000 tables under ETL management across all environments and clients.

Client data integration is a major customer requirement for Equator. In a SaaS business model, integrating data back to the clients as painlessly as possible drives customer acquisition and retention. Most of Equator clients’ ETL teams prefer the data to be normalized from the semi-structured OLTP data. Equator struggled with a traditional ETL tool which included an extensive amount of SQL coding to implement basic metadata-controlled ETL. It was extremely brittle and the run time required at least 6 hours. They adopted a true Java based metadata ETL engine that dramatically improved operations, where run times were reduced to one hour. An entire database server was eliminated, managing ETL business requirements and coordination with client ETL teams was improved, while the need for a large staff was reduced. Currently, ETL operations are managed with one ETL DBA and 1.5 ETL developers. This small ETL team supports change management and production support for a software company with 155 employees.

Additional aspects of metadata-based ETL could include streamlining the integration between application development, OLTP schema management and data integration. These activities could be accomplished by application, database and ETL teams sharing a central repository of data identities. This concept is based on a master data management approach for schema metadata. Enabling this capability will remove the last major challenge of application data entry, the need for consistency in expected data values with ETL, since the OLTP database cannot not enforce data types on semi-structured data.

Conclusion

Metadata ETL is a radical approach to data integration. By exploiting the use of a data dictionary, metadata-based ETL automates real-time schema management and accelerates new ETL development through the importation of existing schema or data model metadata, with easy integration of SQL and NoSQL environments. These beneficial capabilities are accomplished without needing to manage custom-written ETL code.