Every organization should develop and implement a plan to assess current data quality and take clear steps to improve and maintain it

High-quality data gives an organization a complete, accurate picture of its business and the data it uses, supporting better-informed decisions and more efficient operations that can save time and money. High-quality data can support all operations and decisions, strategic as well as tactical.

According to a data quality report from a leading research organization, just 20 percent of organizations have a centralized data quality program. Most companies, then, either rely on a mix of separate, department-level strategies, which makes data quality efforts less effective and leaves more poor-quality data in use, or have no data quality program at all. Poor data quality has been estimated to cost US businesses more than $6 billion annually.

Every organization can benefit from a team devoted to the centralized management of its data assets. Research shows that companies that manage their data quality within an enterprise data management program have seen significantly higher profits and lower operating costs. Organizations that take a decentralized approach to data management, data governance, and data quality are usually much less effective and are hampered in their ability to use data as an asset.

A focused team can develop and implement a centralized, consistent approach to improving data quality, supported by effective data governance and metadata management. The data quality team should be part of a data governance program, with metadata management professionals in support. These specialists should work closely with business data stewards, technology and analytics professionals, and data scientists, who can help enforce policies and promote the use of high-quality data to drive operations and insights across the organization.

Every organization should develop a clear, enterprise-wide plan for identifying data quality objectives, so that data is accurate, current, and appropriate for its intended use, rather than establishing separate data quality and data management programs for each business area. The following steps can help any organization improve and sustain its data quality:

1. Develop business objectives for data quality

Data quality means different things to different organizations. For some, it means customer contact data accurate enough that all orders are received as placed and payments are made smoothly. For others, it means complete product inventory information that supports sales and replenishment. Because many organizations identify several business objectives for improving data quality, different lines of business using the same data may hold different standards, and therefore different expectations, for the data quality improvement program. Ultimately, data quality is about data being fit for its intended purpose.

How can an organization determine its business objectives for data quality? Consider the following factors:

- The organization’s business goals

- How the organization measures progress towards goals (informal, formal, no measurement)

- What data is collected (and reasons/definitions/usage) and where it is stored

- How this data will be used and analyzed for data quality

- What characteristics of data quality are most important to the organization and reasons for the choice
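Because the same data can be fit for one purpose and unfit for another, it can help to write each line of business's expectations down as measurable thresholds. The sketch below is a minimal illustration under stated assumptions: the consumers ("billing", "marketing"), field names, and threshold values are hypothetical, and completeness is the only characteristic measured.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class QualityObjective:
    """Hypothetical objective: who uses the data, which fields they need,
    and the minimum share of records that must have those fields filled."""
    consumer: str
    required_fields: tuple[str, ...]
    min_completeness: float  # between 0.0 and 1.0


def is_filled(value) -> bool:
    """Treat None and blank strings as missing; anything else counts as filled."""
    return value is not None and str(value).strip() != ""


def completeness(records: list[dict], field: str) -> float:
    """Share of records in which `field` is filled."""
    if not records:
        return 0.0
    return sum(is_filled(r.get(field)) for r in records) / len(records)


def evaluate(records: list[dict], objectives: list[QualityObjective]) -> dict[str, bool]:
    """Report, for each consumer, whether the data meets its objective."""
    return {
        obj.consumer: all(completeness(records, f) >= obj.min_completeness
                          for f in obj.required_fields)
        for obj in objectives
    }


# Illustrative data: the same customer records, judged by two lines of business.
customers = [
    {"email": "a@example.com", "phone": "555-0100"},
    {"email": "", "phone": "555-0101"},
    {"email": "c@example.com", "phone": None},
    {"email": "d@example.com", "phone": "555-0103"},
]
objectives = [
    QualityObjective("billing", ("email",), 0.95),
    QualityObjective("marketing", ("email", "phone"), 0.70),
]
print(evaluate(customers, objectives))  # {'billing': False, 'marketing': True}
```

Both consumers read the same records, with 75 percent of emails and phone numbers filled in; that meets the hypothetical marketing threshold but not the billing one, which is what "fit for its intended purpose" looks like in practice.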

2. Assess the current data state

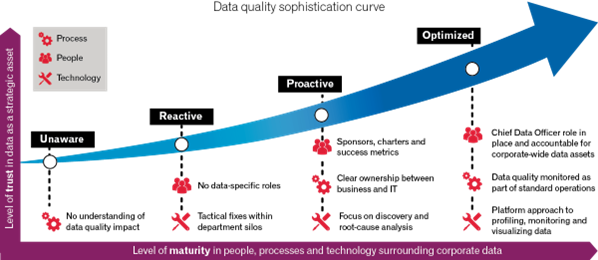

Before implementing any data quality improvement plan, assess the current state of the organization’s data and of any existing data management efforts. This helps identify the next steps in the process and shows the organization its strengths and challenges in managing data. Data quality sophistication is often depicted in four stages, as the following graphic shows.

![Data quality sophistication curve](https://media.edq.com/48d959/globalassets/blog-images/dq-sophistication-curve.png)

Over 60% of organizations fall into either the unaware or the reactive stage, leaving significant room for data quality improvement.

Data quality assessment should be a continuous process. The most successful businesses periodically assess the quality of their data and the effectiveness of their current data quality management plan. Regular reassessment allows a business to respond to areas of concern and make improvements when necessary.
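One way to make reassessment routine is to store each period's scores and compare them automatically. The sketch below assumes scores have already been computed per quality dimension as fractions between 0 and 1; the dimension names, the 0.95 target, and the 0.02 tolerance are illustrative assumptions, not recommended values.

```python
from __future__ import annotations


def flag_concerns(previous: dict[str, float], current: dict[str, float],
                  target: float = 0.95, max_drop: float = 0.02) -> list[str]:
    """Compare two assessment snapshots (scores from 0.0 to 1.0 per dimension).

    Flags any dimension that misses the target, or that fell by more than
    max_drop since the previous assessment. Target and tolerance are illustrative.
    """
    concerns = []
    for dimension, score in sorted(current.items()):
        if score < target:
            concerns.append(f"{dimension}: {score:.2f} is below target {target:.2f}")
        prior = previous.get(dimension)
        if prior is not None and prior - score > max_drop:
            concerns.append(f"{dimension}: fell from {prior:.2f} to {score:.2f}")
    return concerns


# Hypothetical quarterly scores for one critical dataset.
q1 = {"completeness": 0.97, "validity": 0.96, "uniqueness": 0.99}
q2 = {"completeness": 0.93, "validity": 0.96, "uniqueness": 0.99}
for concern in flag_concerns(q1, q2):
    print(concern)
# completeness: 0.93 is below target 0.95
# completeness: fell from 0.97 to 0.93
```

Checking both an absolute target and the change since the last assessment catches two different problems: data that has never been good enough, and data that is getting worse.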

3. Profile existing data

Data profiling is the process of reviewing source data, understanding its structure, content, and relationships, and identifying potential challenges for using the data in projects. It is not a one-time effort and should be done regularly on all critical data. Data quality specialists are skilled in data profiling activities and should be able to create and implement a data profiling effort.

Data profiling is a crucial part of:

- Data warehouse and business intelligence (DW/BI) projects: data profiling can uncover data quality issues in data sources and show what must be corrected in the extract, transform, and load (ETL) processes.

- Data conversion and migration projects: data profiling can identify data quality issues, which can be addressed in scripts and through data integration tools that copy data from source to target. It can also uncover new requirements for the target system arising from hidden business rules.

- Source system data quality projects: data profiling can highlight data that suffers from serious or numerous quality issues, and the source of those issues (e.g., user inputs, errors in interfaces, data corruption).

Data profiling involves:

- Identifying a relevant sample of data from a dataset/database

- Collecting descriptive statistics (e.g., min, max, count and sum) against critical data in the dataset – including statistics on missing or incomplete data

- Collecting data types, length, and recurring patterns for identified critical data

- Tagging data with keywords, descriptions, or categories to confirm meaning and usage

- Discovering metadata and assessing its accuracy against expectations

- Performing data quality assessment processes to evaluate results

- Identifying distributions, key candidates, foreign-key candidates, functional dependencies, embedded value dependencies, and performing inter-table analysis (advanced profiling)
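Several of the basic profiling steps above (descriptive statistics, missing values, data types, lengths, recurring patterns, and a simple key-candidate check) can be sketched with the Python standard library. This is a minimal illustration assuming the sample fits in memory as a list of dictionaries; the column names and rows are hypothetical, and dedicated profiling tools go much further (functional dependencies, inter-table analysis).

```python
from __future__ import annotations
import re
from collections import Counter


def value_pattern(value: str) -> str:
    """Reduce a value to its shape: every digit becomes 9, every letter A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"\d", "9", value))


def profile_column(rows: list[dict], column: str) -> dict:
    """Collect basic profiling statistics for one column of an in-memory sample."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if v is not None and str(v).strip() != ""]
    text = [str(v) for v in present]
    numeric = [v for v in present if isinstance(v, (int, float))]
    stats = {
        "count": len(values),
        "missing": len(values) - len(present),
        "types": sorted({type(v).__name__ for v in present}),
        "distinct": len(set(text)),
        "min_length": min((len(t) for t in text), default=0),
        "max_length": max((len(t) for t in text), default=0),
        "top_patterns": Counter(value_pattern(t) for t in text).most_common(3),
        # Key candidate: every row has a value and no value repeats.
        "key_candidate": len(present) == len(values) and len(set(text)) == len(text),
    }
    if numeric:
        stats.update(min=min(numeric), max=max(numeric), sum=sum(numeric))
    return stats


# Hypothetical sample drawn from a customer table.
sample = [
    {"customer_id": "C001", "zip": "30301", "balance": 120.5},
    {"customer_id": "C002", "zip": "3030", "balance": None},
    {"customer_id": "C003", "zip": "30301", "balance": 80.0},
]
for column in ("customer_id", "zip", "balance"):
    print(column, profile_column(sample, column))
```

In this sample, the four-character ZIP code surfaces as a minority pattern, the missing balance is counted, and only `customer_id` qualifies as a key candidate: the kinds of findings that feed the cleansing step.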

4. Cleanse existing data

Inaccuracies or inconsistencies in data will affect data quality and prevent its use for effective decision-making or streamlining operations. Data cleansing is the process of correcting incomplete or inaccurate information, fixing formatting issues, providing accurate metadata, etc. Issues that require data cleansing include:

- Typos and other errors in data entries

- Incomplete data (missing values or partially completed records)

- Inconsistent formatting of addresses or contact information

- Inconsistent use of reference data (values that differ by application or department)

- Incomplete or outdated contact information

- Incomplete transaction records

- Duplicate data entries (full duplication or partial)

Resolving (cleansing) data quality errors supports more confident use of data for operations and decisions. A variety of tools are available to cleanse data, depending on the technical platform and the business objectives, but the focus should be on consistent processes for managing data rather than on reliance on tools. Identify and correct data quality issues in source data before moving it into a target database, and move only confirmed “clean” data into any target file or database.
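A minimal sketch of cleansing source data before loading: standardize formatting, map inconsistent reference values to one code set, remove duplicates, and split the result into records that are safe to load and records held back for review. The field names, the country mapping, and the validation rules are hypothetical; real rules would come from the organization's own data standards.

```python
from __future__ import annotations
import re

# Hypothetical reference data: spellings used by different departments,
# mapped to one standard code.
COUNTRY_CODES = {"us": "US", "usa": "US", "united states": "US",
                 "uk": "GB", "united kingdom": "GB", "gb": "GB"}
# Deliberately simple format check, not a full email validator.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def cleanse(record: dict) -> dict:
    """Return a standardized copy of one customer record."""
    clean = dict(record)
    # Collapse extra whitespace and title-case the name (a simplification
    # that would mishandle names such as "McDonald").
    clean["name"] = " ".join(str(record.get("name") or "").split()).title()
    clean["email"] = str(record.get("email") or "").strip().lower()
    country = str(record.get("country") or "").strip().lower()
    clean["country"] = COUNTRY_CODES.get(country, country.upper())
    return clean


def is_valid(record: dict) -> bool:
    """A cleansed record needs a name, a plausible email, and a known country."""
    return (bool(record["name"])
            and EMAIL_RE.fullmatch(record["email"]) is not None
            and record["country"] in COUNTRY_CODES.values())


def prepare_for_load(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Cleanse, de-duplicate on email, and split into (loadable, held_back)."""
    loadable, held_back, seen = [], [], set()
    for record in map(cleanse, records):
        if not is_valid(record) or record["email"] in seen:
            held_back.append(record)  # route to data stewards for review
        else:
            seen.add(record["email"])
            loadable.append(record)
    return loadable, held_back


source = [
    {"name": "  ada   lovelace ", "email": "Ada@Example.com ", "country": "usa"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "country": "United States"},
    {"name": "Alan Turing", "email": "alan.turing@", "country": "UK"},
    {"name": "grace hopper", "email": "grace@example.org", "country": "uk"},
]
loadable, held_back = prepare_for_load(source)
print([r["name"] for r in loadable])  # ['Ada Lovelace', 'Grace Hopper']
print(len(held_back))                 # 2
```

Only the `loadable` list would move to the target; the duplicate and the record with a malformed email are held back rather than silently dropped, so someone can correct them at the source.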

5. Establish a data collection plan for improved data quality management

Because data collection issues are nearly inevitable, develop a clear plan to reduce how often they occur and to address them when they do. The data quality team should work with data governance and the appropriate technology teams to identify the process steps, metrics, and guidelines that prevent poor-quality data from entering the environment, and to consistently assess the quality of the data already stored. An effective data collection plan should include:

- Using tools and user aids to ensure accuracy at the point of entry, such as required fields on forms, consistent reference data, and data definitions available at data entry

- Regular, periodic data cleansing processes performed by the data quality team and supported by business data stewards to catch errors or identify inaccurate/incomplete information

- Providing appropriate, regular training to all staff on the concepts, processes, and guidelines for supporting high-quality data collection and usage

A clear data collection and data quality management plan saves valuable time and money (fewer mistakes, less time spent on resolving data quality issues) and increases confidence in data for operational and strategic purposes.
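Preventing errors at the point of entry can be as simple as checking each submission against required fields, reference data, and basic format rules before it is saved, and returning messages that tell the user what to fix. The sketch below assumes a hypothetical order-entry form; the field names, allowed status codes, and messages are illustrative.

```python
from __future__ import annotations
from datetime import date

# Hypothetical order-entry form rules.
REQUIRED_FIELDS = ("customer_id", "order_date", "status")
ALLOWED_STATUSES = {"NEW", "SHIPPED", "CANCELLED"}  # illustrative reference data


def is_blank(value) -> bool:
    return value is None or str(value).strip() == ""


def validate_entry(form: dict) -> list[str]:
    """Return messages describing problems; an empty list means the entry may be saved."""
    errors = [f"'{field}' is required" for field in REQUIRED_FIELDS
              if is_blank(form.get(field))]
    status = str(form.get("status") or "").strip().upper()
    if status and status not in ALLOWED_STATUSES:
        errors.append(f"'status' must be one of {sorted(ALLOWED_STATUSES)}")
    raw_date = str(form.get("order_date") or "").strip()
    if raw_date:
        try:
            if date.fromisoformat(raw_date) > date.today():
                errors.append("'order_date' cannot be in the future")
        except ValueError:
            errors.append("'order_date' must be a valid date in YYYY-MM-DD format")
    return errors


print(validate_entry({"customer_id": "C001", "order_date": "2024-01-15", "status": "new"}))
# []
print(validate_entry({"customer_id": "C001", "order_date": "2024-02-30", "status": "shiped"}))
# two messages: unknown status code, impossible date
```

Returning every problem at once, rather than stopping at the first, lets the person entering the data fix the record in one pass.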

6. Develop a plan for data quality maintenance and improvement

To maintain strong data quality and continually improve data management capabilities across the organization, develop and implement a plan to regularly assess the quality of data and its usability. Data-driven operations and decisions require a continually evolving enterprise approach to data management (data governance, metadata management, data quality).

Any plan for data quality management should include reporting on the results of data profiling, data cleansing, and measurements of data quality process effectiveness. A data quality dashboard is an excellent way to deliver this information to various audiences. Regular updates to the dashboard are essential, because they demonstrate data quality improvements and raise awareness of continuing challenges.
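A dashboard needs a small set of repeatable measurements. The sketch below computes three dimensions commonly used in data quality practice (completeness, validity, and uniqueness) for one dataset and returns a row that could be appended to a dashboard's history table. The dataset name, field names, and validity rules are assumptions for illustration; which dimensions to track should follow the objectives set in step 1.

```python
from __future__ import annotations
import re
from datetime import date


def is_filled(value) -> bool:
    return value is not None and str(value).strip() != ""


def quality_snapshot(dataset: str, rows: list[dict], key: str,
                     required: tuple[str, ...], rules: dict[str, str],
                     as_of: date | None = None) -> dict:
    """Score one dataset on completeness, validity, and uniqueness (0.0 to 1.0)."""
    total = len(rows) or 1  # avoid dividing by zero on an empty dataset
    # Completeness: rows where every required field is filled.
    complete = sum(all(is_filled(r.get(f)) for f in required) for r in rows)
    # Validity: rows where every rule's regular expression matches the whole value.
    valid = sum(all(re.fullmatch(pattern, str(r.get(f) or ""))
                    for f, pattern in rules.items())
                for r in rows)
    # Uniqueness: distinct key values relative to the number of rows.
    distinct_keys = len({r.get(key) for r in rows})
    return {
        "as_of": (as_of or date.today()).isoformat(),
        "dataset": dataset,
        "rows": len(rows),
        "completeness": round(complete / total, 3),
        "validity": round(valid / total, 3),
        "uniqueness": round(distinct_keys / total, 3),
    }


# Hypothetical customer extract with one blank email, one short ZIP, one repeated id.
rows = [
    {"id": 1, "email": "a@example.com", "zip": "30301"},
    {"id": 2, "email": "", "zip": "30302"},
    {"id": 2, "email": "c@example.com", "zip": "30303"},
    {"id": 4, "email": "d@example.com", "zip": "3030"},
    {"id": 5, "email": "e@example.com", "zip": "30305"},
]
snapshot = quality_snapshot("customers", rows, key="id", required=("email", "zip"),
                            rules={"zip": r"\d{5}", "email": r"[^@\s]+@[^@\s]+"},
                            as_of=date(2024, 3, 31))
print(snapshot)
```

Storing one such row per dataset per period gives the dashboard a trend line, which is what makes improvements (and regressions) visible to its audiences.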

Include data quality responsibilities in all business data stewards’ role descriptions and provide appropriate training in data quality activities for data stewards, under the guidance of the data quality specialists. Doing so will support the establishment of a culture focused on data quality and reinforce the need for an enterprise approach to data management and the value of data governance.

Effective plans for data quality maintenance and improvement require consistent support from the entire organization: leadership, business and technical management, and staff. A data-driven business should foster a healthy culture of improvement, one that is comfortable acknowledging when change is necessary and committing to make that change. Acknowledging issues such as flawed data collection or storage methods, or inconsistent data quality monitoring, as they arise is essential for sustaining business growth. Recognizing these issues, regularly communicating the organizational value of high-quality data, and developing an appropriate approach to managing data as a true enterprise asset allow the organization to flourish and meet its goals and objectives.

Conclusion

Data quality management processes implemented across a company can both support, and be supported by, data governance and related efforts, including the enforcement of consistent data standards across the organization. Attention to improving data quality helps ensure that data can be used more easily for decision-making and for more effective operations.